The Future is Calling: Why LLM Agents Are Your Next Big Investment (And Why We’re Betting On Voice)

By Alex Carter, Co-Founder & CTO of VoiceFlow Dynamics

Friday morning, 9:17 AM. My third espresso cools beside a half-scribbled roadmap as I scroll through yet another "AI will change everything" headline. But here’s the thing: it already has. And if you’re an investor still sitting out LLM agents, let’s talk about why that’s a missed opportunity, especially when it comes to the oldest, most human interface of all: the phone call.

4/2/2025 · 2 min read

LLM Agents Aren’t Just Chatbots (And Why That Matters for Your Portfolio)

Remember when GPT-3 blew minds by writing poetry? Cute. Today’s LLM agents—like AutoGPT, GPT-Engineer, and our own voice-native agents at VoiceFlow Dynamics—don’t just talk. They act. Need to book a dentist appointment? An agent can call the clinic, negotiate a slot, and text you the confirmation. Handling customer complaints? It’ll pull order history, process refunds, and escalate complex cases—all without a human tapping a keyboard.

The key differentiators?

- Autonomy: They break tasks into steps (like a junior exec with infinite caffeine tolerance).

- Tool mastery: They wield APIs, browsers, and yes, telephony systems like pros.

- Memory: They carry context from one interaction to the next (unlike my co-founder’s 65-year-old mentor, who still writes passwords on sticky notes).
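To make "autonomy, tools, memory" concrete, here is a toy plan-and-execute loop built around the dentist example above. It is a sketch under loud assumptions: a real agent would ask an LLM to produce the plan, whereas here a single hard-coded template stands in for that call, and the tool names (`call`, `negotiate_slot`, `send_text`, `escalate`) are hypothetical placeholders, not VoiceFlow Dynamics' actual stack. Memory is modeled as a plain log of step outcomes.

```python
from typing import Callable, Dict, List, Tuple

# A plan is an ordered list of (tool_name, argument) steps. Tool names are hypothetical.
Step = Tuple[str, str]


def decompose(goal: str) -> List[Step]:
    # Autonomy: break a goal into steps. A real agent would ask an LLM for this plan;
    # a single hard-coded template stands in for that call here.
    if "dentist" in goal.lower():
        return [
            ("call", "dental clinic"),
            ("negotiate_slot", "next available weekday morning"),
            ("send_text", "appointment confirmation"),
        ]
    # Anything the planner does not recognize goes to a human.
    return [("escalate", goal)]


def run(goal: str, tools: Dict[str, Callable[[str], str]], memory: List[str]) -> List[str]:
    results = []
    for tool_name, arg in decompose(goal):
        # Tool use: each step is executed by the named tool.
        result = tools[tool_name](arg)
        # Memory: log each outcome so later steps (or later calls) can refer back to it.
        memory.append(f"{tool_name}: {result}")
        results.append(result)
    return results


# Stub tools that just report what they did (n=name pins each lambda to its own name).
tools = {
    name: (lambda arg, n=name: f"{n} done ({arg})")
    for name in ("call", "negotiate_slot", "send_text", "escalate")
}
memory: List[str] = []
out = run("Book a dentist appointment", tools, memory)
print(out)
```

Swapping `decompose` for an LLM call that returns the same list-of-steps shape is the usual way such a loop grows up; the execution and logging side stays the same.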

From ELIZA to AI Calling Your Customers: A 60-Second History

Fun fact: The first "AI agent," ELIZA (1966), mimicked therapists by regurgitating scripts. Fast-forward to 2025:

- 2017: Transformers made AI grasp context (RIP, keyword matching).

- 2022: ChatGPT went viral, proving consumers want AI convos.

- 2023: Agents evolved from "chatty" to executive assistants—scheduling meetings, analyzing data, even coding.

- 2024: Voice became the battleground. Why? Because 42% of customers still prefer phone support (Forrester, 2024). That’s where we doubled down.

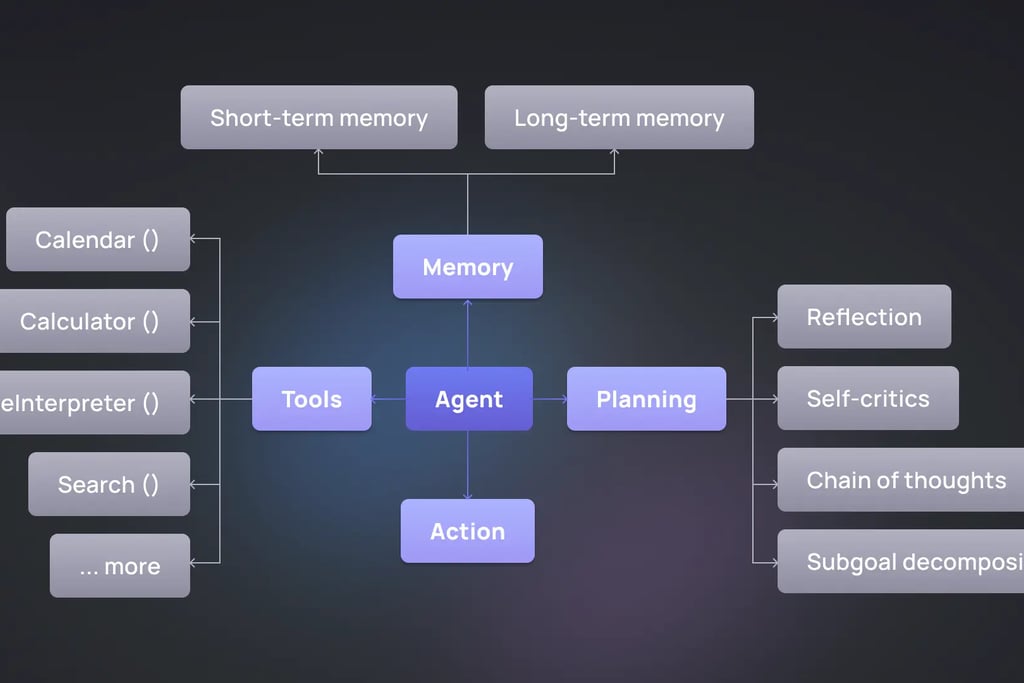

How LLM Agents Actually Work (No PhD Required)

Picture an agent as a Swiss Army knife with these modules:

1. Perception: Hears/reads inputs (even through staticky calls).

2. Planning: Decides whether to check your CRM, transfer to a human, or upsell.

3. Memory: Knows your customer’s last complaint (and their favorite sports team).

4. Tools: Dialer? Check. Payment API? Check. Empathy? Surprisingly, yes.
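The four modules above can be wired together in a few lines. This is a minimal sketch, assuming a keyword rule in place of the LLM's planning step and plain Python callables in place of real dialer or payment integrations; the class `Agent` and the tool names `lookup_order`, `process_refund`, and `transfer_to_human` are hypothetical illustrations, not our production architecture.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Toy agent wiring perception, planning, memory, and tools together (names hypothetical)."""
    # Tools: action name -> callable taking a customer ID and returning a reply.
    tools: Dict[str, Callable[[str], str]]
    # Memory: per-customer list of everything they have said.
    memory: Dict[str, List[str]] = field(default_factory=dict)

    def perceive(self, raw_input: str) -> str:
        # Perception: normalize the transcript (a real system would run speech-to-text first).
        return raw_input.strip().lower()

    def plan(self, text: str) -> str:
        # Planning: a keyword rule standing in for an LLM's decision.
        if "refund" in text:
            return "process_refund"
        if "order" in text or "delivery" in text:
            return "lookup_order"
        return "transfer_to_human"

    def handle(self, customer_id: str, raw_input: str) -> str:
        text = self.perceive(raw_input)
        # Memory: remember what this customer said.
        self.memory.setdefault(customer_id, []).append(text)
        # Tools: run whichever action the planner picked.
        return self.tools[self.plan(text)](customer_id)


agent = Agent(tools={
    "lookup_order": lambda cid: f"order status for {cid}",
    "process_refund": lambda cid: f"refund issued to {cid}",
    "transfer_to_human": lambda cid: f"transferring {cid} with context",
})
print(agent.handle("c42", "  Where is my ORDER? "))  # order status for c42
```

Keeping each module behind its own method means the keyword planner can later be replaced by a model call without touching perception, memory, or the tool registry.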

Real-world flow:

1. Customer calls, yelling about a late delivery.

2. Agent pulls up the order number, listens for frustration cues, offers a discount + tracking link.

3. If the issue escalates? Warm-transfer to a human with full context.
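The late-delivery flow above boils down to one decision: resolve in-call or warm-transfer with context. Here is a minimal sketch of that branch, assuming frustration is approximated by counting cue words in the transcript (a production system might use prosody or a trained classifier instead). The cue list, the threshold of 3, the `CallResult` type, and the `example.com` tracking URL are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical cue words; real systems might score tone of voice instead.
FRUSTRATION_CUES = ("late", "ridiculous", "again", "angry", "unacceptable")


@dataclass
class CallResult:
    action: str                                # "resolve" or "warm_transfer"
    message: str                               # what the agent says to the caller
    handoff: Optional[Dict[str, str]] = None   # context handed to a human on transfer


def frustration_score(utterance: str) -> int:
    # Count cue words, ignoring trailing punctuation, as a crude frustration signal.
    return sum(1 for w in utterance.lower().split() if w.strip(".,!?") in FRUSTRATION_CUES)


def handle_late_delivery(order_id: str, transcript: List[str], threshold: int = 3) -> CallResult:
    score = sum(frustration_score(u) for u in transcript)
    if score >= threshold:
        # Escalate: warm transfer carrying the full context, so the caller never repeats themselves.
        return CallResult(
            action="warm_transfer",
            message="Let me connect you with a specialist who already has the details.",
            handoff={
                "order_id": order_id,
                "frustration_score": str(score),
                "transcript": " | ".join(transcript),
            },
        )
    # Otherwise resolve in-call: apologize, offer a discount, share tracking (placeholder URL).
    return CallResult(
        action="resolve",
        message=(
            f"Sorry about order {order_id}. I've applied a discount, and you can track it at "
            f"https://example.com/track/{order_id}"
        ),
    )


result = handle_late_delivery("A123", ["It's late again!", "This is ridiculous."])
print(result.action)  # warm_transfer
```

Passing the transcript and score in `handoff` is what makes the transfer "warm": the human picks up the call already knowing what happened.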

Where Agents Are Printing Money (And Where Voice Fits In)

McKinsey’s estimate that generative AI could add up to $4.4 trillion a year in economic value isn’t hype; it’s already showing up:

- Customer Support: Zendesk’s AI cuts resolution time by 60%. But phones still handle the high-value queries (disputes, sales). That’s our niche.

- Sales: Agents cold-call leads, qualify them, and book demos. (Our pilot boosted conversions by 22%.)

- Healthcare: AI nurses call patients for post-op check-ins. (HIPAA-compliant, of course.)

The voice edge? Human trust. A 2025 PwC study found voice interactions resolve issues 3x faster than chat—especially for Boomers and high-net-worth clients.

Why We’re Building Voice-First Agents (And Why Investors Should Care)

My co-founder (the serial entrepreneur with the SIP-trunking empire) likes to say: "People hang up on IVRs. They talk to agents." Here’s our thesis:

1. Phones are sticky: Even Gen Z calls for urgent issues (try disputing a charge via chatbot).

2. Agents scale intimacy: One human can manage 10 calls an hour. One AI? 10,000 in the same hour, with tone modulation to match each caller’s mood.

3. Data goldmine: Every call trains the agent on accents, objections, and buying signals.

The Bottom Line

LLM agents aren’t "the future." They’re today’s ROI lever. And while everyone’s obsessed with text, we’re betting on the channel that’s survived 150 years of disruption: the humble phone call. —Alex

PS: Our Series B opens next month. Let’s grab coffee (or let our agent schedule it for you).

Lemur LAB

© 2025. All rights reserved
